Abstract

TL;DR: A new method for aerial visual localization using an LoD 3D map with neural wireframe alignment.

We propose LoD-Loc, a new method for aerial visual localization. Unlike existing localization algorithms, LoD-Loc does not rely on complex 3D representations and can estimate the pose of an Unmanned Aerial Vehicle (UAV) using a Level-of-Detail (LoD) 3D map. It achieves this mainly by aligning the wireframe obtained by projecting the LoD model with the wireframe predicted by a neural network.
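To make the projection side of this alignment concrete, here is a minimal NumPy sketch of projecting a 3D wireframe into the image under a candidate pose. It assumes a plain pinhole camera with a world-to-camera pose `(R, t)`; the helper names `sample_wireframe` and `project_points` and the toy intrinsics are illustrative and are not taken from the LoD-Loc code.

```python
import numpy as np

def sample_wireframe(edges, n_per_edge=10):
    """Sample points along 3D line segments. edges: (E, 2, 3) endpoints."""
    s = np.linspace(0.0, 1.0, n_per_edge)[None, :, None]  # (1, n, 1)
    start = edges[:, 0][:, None, :]                        # (E, 1, 3)
    end = edges[:, 1][:, None, :]                          # (E, 1, 3)
    return ((1.0 - s) * start + s * end).reshape(-1, 3)

def project_points(points_world, R, t, K):
    """Pinhole projection, assuming x_cam = R @ x_world + t."""
    cam = points_world @ R.T + t
    in_front = cam[:, 2] > 1e-6
    # Use a dummy depth of 1 for points behind the camera to avoid
    # dividing by zero; callers should mask them with `in_front`.
    depth = np.where(in_front, cam[:, 2], 1.0)
    uv = (cam @ K.T)[:, :2] / depth[:, None]
    return uv, in_front

# Toy example: one horizontal edge seen from 10 units away.
K = np.array([[100.0, 0.0, 50.0],
              [0.0, 100.0, 50.0],
              [0.0, 0.0, 1.0]])
roof_edge = np.array([[[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]]])
points = sample_wireframe(roof_edge, n_per_edge=3)
uv, in_front = project_points(points, np.eye(3), np.array([0.0, 0.0, 10.0]), K)
print(uv)
```

The projected pixel locations are where a hypothesized pose expects wireframe evidence to appear in the network's prediction.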

Thermal Infrared Dataset (New)

We additionally collected thermal infrared queries with annotated poses for the LoD3.0 dataset. The thermal infrared queries can be downloaded by sending a request by email to zhujuelin@nudt.edu.cn.

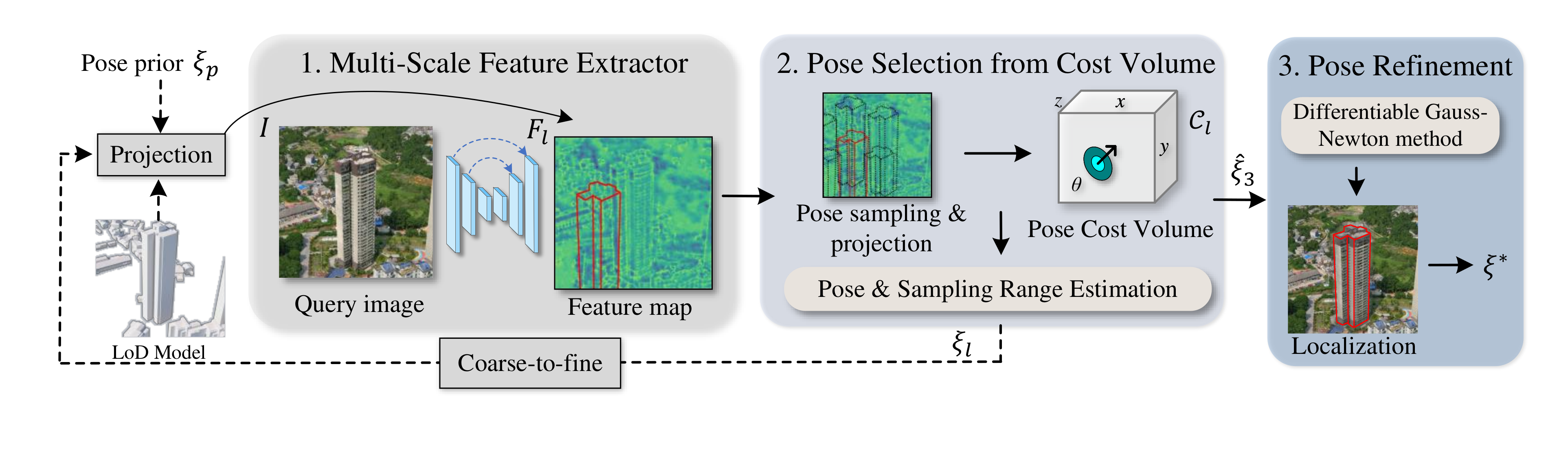

Pipeline overview

Overview of LoD-Loc. 1. The method uses a CNN to extract multi-level features $\mathbf{F}_l$ for the query image $\mathbf{I}$. 2. A cost volume $\mathcal{C}_l$ is built for various pose hypotheses sampled around the coarse sensor pose $\boldsymbol{\mathcal{\xi}}_p$ to select the pose $\boldsymbol{\mathcal{\xi}}_l$ with the highest probability, based on the projected wireframe of the 3D LoD model. 3. A differentiable Gauss-Newton method is used to refine the final selected pose $\boldsymbol{\mathcal{\xi}}_3$, thereby acquiring a more accurate pose $\boldsymbol{\mathcal{\xi}}^{*}$.
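The pose selection in step 2 can be illustrated with a heavily simplified sketch. It assumes a single-level wireframe probability map instead of the multi-level features $\mathbf{F}_l$, translation-only hypotheses around the prior, and nearest-neighbour sampling. All function names and the toy scene are hypothetical, and the Gauss-Newton refinement of step 3 is not covered.

```python
import numpy as np

def project(points, R, t, K):
    """Pinhole projection, assuming x_cam = R @ x_world + t."""
    cam = points @ R.T + t
    valid = cam[:, 2] > 1e-6
    depth = np.where(valid, cam[:, 2], 1.0)
    return (cam @ K.T)[:, :2] / depth[:, None], valid

def wireframe_score(prob_map, uv, valid):
    """Mean wireframe probability at the projected points (nearest pixel)."""
    h, w = prob_map.shape
    cols = np.rint(uv[:, 0]).astype(int)
    rows = np.rint(uv[:, 1]).astype(int)
    inside = valid & (cols >= 0) & (cols < w) & (rows >= 0) & (rows < h)
    return float(prob_map[rows[inside], cols[inside]].sum() / len(uv))

def select_pose(prob_map, points, R, t_prior, K, offsets):
    """Score every translation hypothesis and return the most probable one."""
    scores = np.array([
        wireframe_score(prob_map, *project(points, R, t_prior + d, K))
        for d in offsets
    ])
    probs = np.exp(scores - scores.max())
    probs /= probs.sum()  # softmax over the hypothesis set
    return t_prior + offsets[int(np.argmax(probs))], probs

# Toy scene: a square outline on the ground, viewed from straight above.
K = np.array([[100.0, 0.0, 32.0], [0.0, 100.0, 32.0], [0.0, 0.0, 1.0]])
R = np.eye(3)
t_true = np.array([0.0, 0.0, 10.0])
side = np.linspace(-2.0, 2.0, 21)
const = np.full_like(side, 2.0)
zeros = np.zeros_like(side)
points = np.concatenate([
    np.stack([side, -const, zeros], axis=1),
    np.stack([side, const, zeros], axis=1),
    np.stack([-const, side, zeros], axis=1),
    np.stack([const, side, zeros], axis=1),
])

# Stand-in for the network output: a map that is 1 on the true wireframe.
prob_map = np.zeros((64, 64))
uv_true, _ = project(points, R, t_true, K)
prob_map[np.rint(uv_true[:, 1]).astype(int), np.rint(uv_true[:, 0]).astype(int)] = 1.0

# Coarse prior with a half-unit error, and a 3x3 grid of hypotheses around it.
t_prior = t_true + np.array([0.5, -0.5, 0.0])
steps = [-0.5, 0.0, 0.5]
offsets = np.array([[dx, dy, 0.0] for dx in steps for dy in steps])
t_best, probs = select_pose(prob_map, points, R, t_prior, K, offsets)
print(t_best)
```

In this toy setup only the hypothesis that cancels the prior error places every projected point on the wireframe, so it receives the highest probability.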

Wireframe Projection Visualization

This demo shows localization results on several drone-captured videos, in both RGB and thermal modalities.

Citation

@inproceedings{
  author = {Juelin Zhu and Shen Yan and Long Wang and Shengyue Zhang and Yu Liu and Maojun Zhang},
  title = {LoD-Loc: Visual Localization using LoD 3D Map with Neural Wireframe Alignment},
  booktitle = {NeurIPS},
  year = {2024},
}

Acknowledgements

LoD-Loc uses OrienterNet as its code backbone. Thanks to Paul-Edouard Sarlin for open-sourcing his excellent work OrienterNet and its PyTorch implementation. Thanks to Qi Yan for open-sourcing his excellent work CrossLoc.